Modern AI workloads are extraordinary in scale—thousands of GPUs, terabytes of data, and training runs that can span weeks. Yet for all that sophistication, visibility into how those workloads actually behave remains one of AI’s weakest links.

Performance drops. GPU utilization dips. Iterations slow down. But the “why” often disappears into a haze of partial telemetry and scattered dashboards.

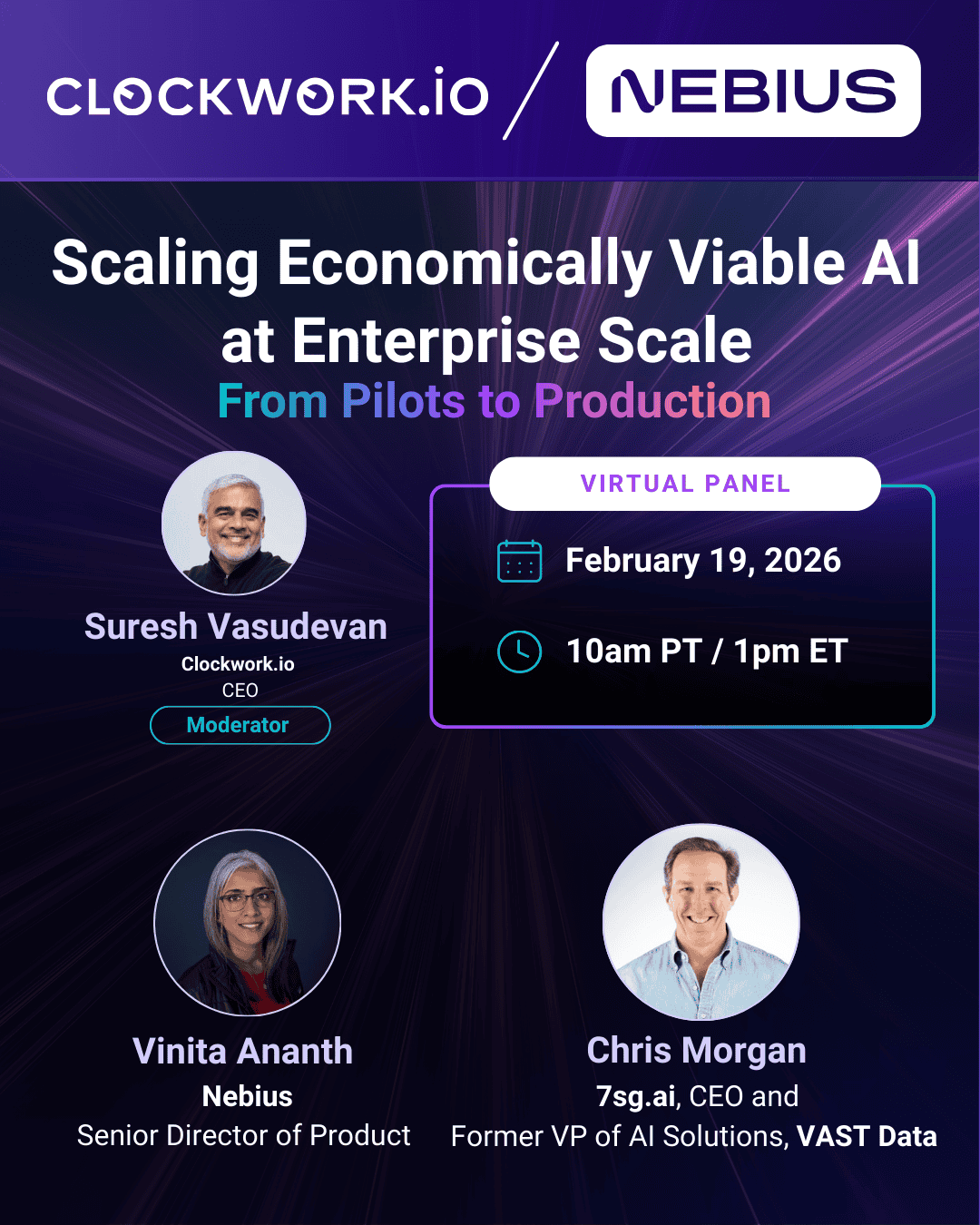

Clockwork.io set out to change that. Its no-code PyTorch monitoring solution delivers per-iteration visibility across both model training and infrastructure—so teams can see inefficiencies as they happen, quantify their impact, and eliminate wasted GPU hours before they spiral into lost time and cost.

The Hidden Cost of Blind Spots in AI Training

Every AI organization faces the same paradox: training workloads have grown enormously in scale, but visibility hasn’t kept up.

For AI engineers, every slowdown can feel like guesswork. Is it the data loader? PCIe congestion? Synchronization lag? Debugging means juggling TensorBoard, Grafana, and logs—trying to stitch together disconnected fragments of the truth. The result: brittle instrumentation, slow iteration cycles, and prolonged diagnosis.

For infrastructure engineers, the problem looks different but feels the same. They oversee massive GPU fleets but lack the context to attribute inefficiencies to specific jobs or iterations. Metrics without attribution lead to alerts without action—and endless reactive firefighting.

Across both teams, the hidden cost is staggering: lost GPU hours, longer training cycles, and delayed experimentation. Training inefficiency has become one of the most expensive blind spots in AI.

The Clockwork.io Breakthrough: Training-Aware Visibility

Clockwork.io unifies model-level and infrastructure-level telemetry into a single visibility layer that serves both sides of the AI workflow.

For AI engineers, it unlocks deep training introspection without code changes—surfacing iteration-level performance metrics automatically and translating them into clear, actionable insights.

For infrastructure engineers, it connects the dots across frameworks, runtimes, and hardware layers—turning what used to be isolated telemetry streams into a continuous picture of performance, efficiency, and root-cause attribution.

Together, these perspectives form a shared operational truth—one that replaces guesswork with measurable, real-time intelligence.

How It Works: A Continuous Feedback Loop for AI Efficiency

Clockwork.io’s architecture runs as a continuous feedback loop that connects data collection, analysis, and monitoring in real time.

- The Data Collector observes training behavior directly at the PyTorch or eBPF layer, capturing fine-grained timing and throughput metrics with minimal overhead.

- The Agent aggregates both training and infrastructure telemetry, computes efficiency insights, and exposes them through Prometheus for seamless integration with Grafana dashboards.

- The Coordinator continuously correlates and updates performance data across agents—quantifying inefficiencies as they occur, so wasted GPU capacity becomes visible, measurable, and preventable.

This unified pipeline turns raw activity into live operational intelligence that teams can act on instantly.
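To make the collect-and-expose flow concrete, here is a minimal sketch in plain Python. It is not Clockwork.io's implementation: the `IterationTimer` class, the `to_prometheus_text` helper, and the `training_iteration_seconds` metric name are all hypothetical, chosen only to illustrate how per-iteration timing could be captured around a training loop and rendered in the Prometheus text exposition format.

```python
import time
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")


class IterationTimer:
    """Hypothetical helper: wraps any iterable (for example a data loader)
    and records the wall-clock seconds spent on each iteration."""

    def __init__(self, iterable: Iterable[T],
                 clock: Callable[[], float] = time.perf_counter) -> None:
        self._iterable = iterable
        self._clock = clock
        self.durations: List[float] = []

    def __iter__(self) -> Iterator[T]:
        last = self._clock()
        for item in self._iterable:
            yield item
            # Execution resumes here when the caller asks for the next item,
            # i.e. after it has finished its training step on `item`.
            now = self._clock()
            self.durations.append(now - last)  # data loading + step time
            last = now


def to_prometheus_text(job: str, rank: int, durations: List[float]) -> str:
    """Render the most recent iteration time as a Prometheus gauge.

    The metric name is an assumption made for this sketch. Label values are
    not escaped here, so `job` must not contain quotes or backslashes.
    """
    if not durations:
        return ""
    name = "training_iteration_seconds"
    labels = f'job="{job}",rank="{rank}"'
    return (
        f"# HELP {name} Wall-clock duration of the most recent iteration.\n"
        f"# TYPE {name} gauge\n"
        f"{name}{{{labels}}} {durations[-1]}\n"
    )
```

In a training script this would be used as `for batch in IterationTimer(loader): ...`, which is a code change that a no-code collector avoids by observing the framework or kernel layer instead. The sketch only shows what kind of signal ends up in the pipeline.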

The Portfolio of Visibility Metrics

1. Measured Metrics – The Ground Truth

These metrics capture the essential signals of training and system behavior—iteration times, utilization, and throughput. They form the quantitative foundation for what Clockwork.io reveals.

2. Derived Metrics – Translating Behavior into Insight

By correlating raw telemetry across stack layers, derived metrics reveal why a workload is inefficient, surfacing communication delays, bottlenecks, and underperforming components. They turn complex system dynamics into understandable performance patterns.

3. Impact Metrics – Quantifying the Cost of Inefficiency

Finally, impact metrics translate inefficiency into business terms: how much GPU time was idle, how much was wasted on redundant computation, and where optimization yields the biggest return.

Together, these layers move visibility beyond measurement into understanding, attribution, and optimization—the pillars of efficient, scalable AI operations.
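The step from measured to derived to impact metrics can be sketched with simple arithmetic. The function names and formulas below are illustrative assumptions, not Clockwork.io's definitions. In particular, the sketch assumes synchronous training, where every GPU in the job waits whenever an iteration runs slower than its baseline, so excess time is multiplied by the GPU count.

```python
from typing import List


def excess_seconds(iteration_seconds: List[float],
                   baseline_seconds: float) -> List[float]:
    """Derived metric (hypothetical): time each iteration spent above the
    expected baseline. Faster-than-baseline iterations count as zero."""
    return [max(0.0, t - baseline_seconds) for t in iteration_seconds]


def wasted_gpu_hours(iteration_seconds: List[float],
                     baseline_seconds: float,
                     num_gpus: int) -> float:
    """Impact metric (hypothetical): excess time scaled by job size.

    Assumes synchronous training, so all `num_gpus` devices are held up
    for the full excess of every slow iteration.
    """
    total_excess = sum(excess_seconds(iteration_seconds, baseline_seconds))
    return total_excess * num_gpus / 3600.0


def efficiency(iteration_seconds: List[float],
               baseline_seconds: float) -> float:
    """Ratio of ideal run time (every iteration at baseline) to actual time."""
    actual = sum(iteration_seconds)
    ideal = baseline_seconds * len(iteration_seconds)
    return ideal / actual if actual > 0 else 0.0
```

For example, four iterations measured at 2, 2, 3, and 5 seconds against a 2-second baseline carry 4 seconds of excess. On a 720-GPU job that is 4 × 720 / 3600 = 0.8 GPU hours lost, at an efficiency of 8 / 12, or about 67%.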

Detecting and Quantifying Inefficiency in Real Time

Clockwork.io doesn’t just show that inefficiency exists—it tells you where it is, what caused it, and what it costs.

When a performance anomaly occurs—whether a GPU slows down, a link flaps, or data transfer stalls—Clockwork.io automatically surfaces the event, quantifies the impact, and updates time-wasted metrics across your cluster.

The result is a live, quantified view of GPU efficiency that both engineers and operators can understand at a glance. What once required post-mortem analysis now happens in real time—enabling teams to move from reactive firefighting to continuous optimization.
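One simple way to flag slow iterations as they arrive is to compare each one against a rolling median of recent healthy iterations. The sketch below uses that technique purely for illustration. The source does not describe Clockwork.io's detection method, and the function name, window size, and threshold here are all assumptions.

```python
from collections import deque
from statistics import median
from typing import Deque, List, Tuple


def find_slow_iterations(durations: List[float],
                         window: int = 20,
                         threshold: float = 1.5,
                         min_history: int = 5) -> List[Tuple[int, float]]:
    """Return (iteration index, seconds above baseline) for slow iterations.

    An iteration is flagged when it exceeds `threshold` times the median of
    the last `window` unflagged iterations. All defaults are illustrative.
    """
    history: Deque[float] = deque(maxlen=window)
    anomalies: List[Tuple[int, float]] = []
    for index, seconds in enumerate(durations):
        if len(history) >= min_history:
            baseline = median(history)
            if seconds > threshold * baseline:
                anomalies.append((index, seconds - baseline))
                continue  # keep flagged samples out of the baseline
        history.append(seconds)
    return anomalies
```

Because flagged samples never enter the baseline, a permanent slowdown keeps being reported until someone intervenes. That suits time-wasted accounting, though a production detector would likely also need a way to accept a new normal.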

From Data to Clarity: Visualizing Efficiency

Clockwork.io’s Prometheus-backed Grafana dashboards make invisible performance visible.

They bring together iteration timing, anomaly detection, and impact metrics into a single, coherent visualization—showing exactly when and why performance changed.

A typical dashboard reveals:

- Multiple concurrent jobs across distributed hosts

- Clear indicators for anomalies or degraded performance

- Quantified impact in GPU hours lost or throughput reduced

This unified visualization doesn’t just inform—it transforms raw data into actionable understanding, giving both AI and infrastructure engineers a common language for efficiency.

From Reactive to Predictive Optimization

AI and infrastructure engineers share a mission: maximize GPU uptime, throughput, and reliability.

Until now, their worlds have been separated—different dashboards, incomplete data, and constant guesswork.

Clockwork.io changes that.

It provides a no-code, training-aware visibility layer that connects model behavior to system performance seamlessly.

- For AI engineers, that means faster debugging, clearer iteration feedback, and fewer wasted cycles.

- For infrastructure engineers, it means correlated telemetry, measurable efficiency gains, and higher cluster ROI.

By turning per-iteration PyTorch signals into shared operational intelligence, Clockwork.io makes AI systems self-diagnosing, self-optimizing, and transparently efficient.


Closing Thoughts

AI teams are pushing the boundaries of what’s possible—but even the best hardware can’t compensate for what you can’t see.

Clockwork.io doesn’t just monitor GPUs; it illuminates the entire training lifecycle, connecting human intuition with machine-level precision. When every iteration, link, and resource is visible, inefficiency becomes solvable—and every GPU hour counts.

In a world where scale is everything, visibility is leverage.

Clockwork.io gives AI teams exactly that: the power to see, measure, and optimize every step of their journey.